Why small visual changes take so long in product design

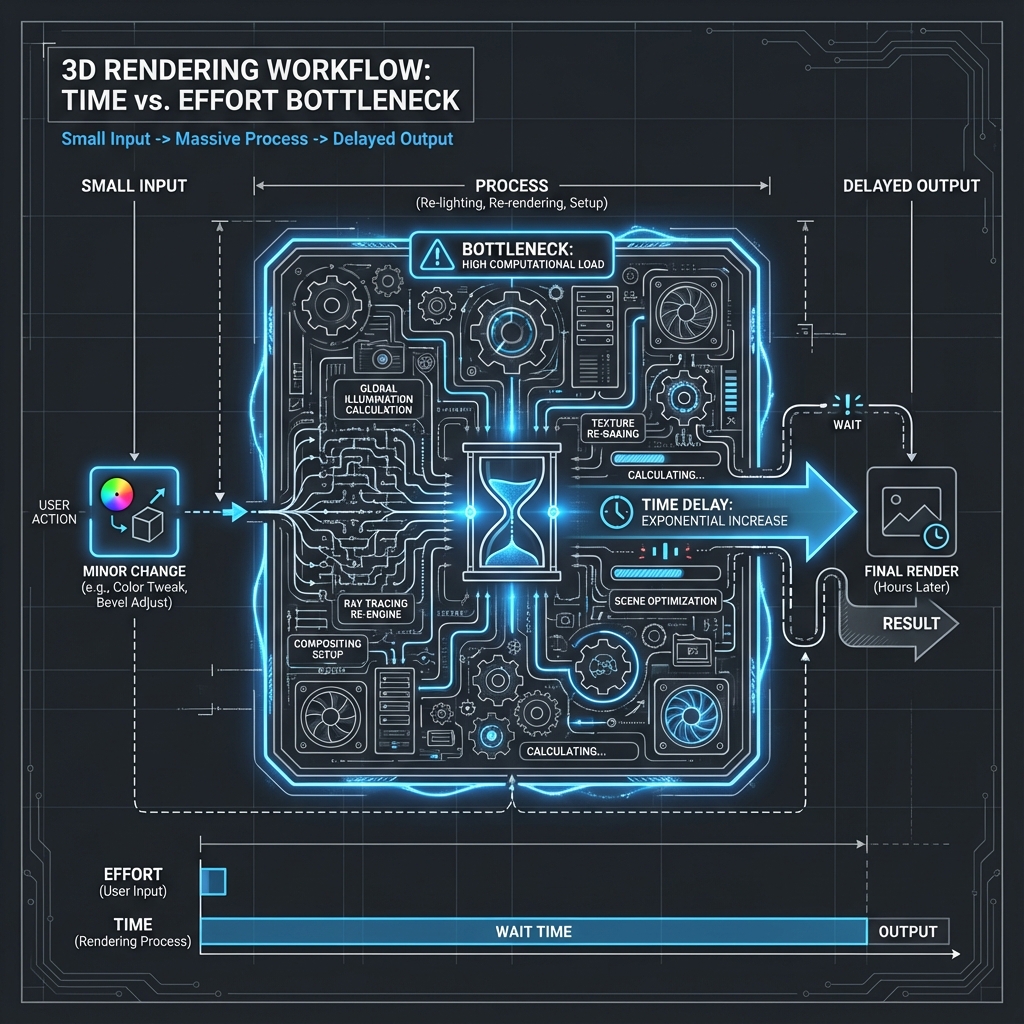

You change a material finish. It takes 10 seconds. But seeing that change in context takes 2 hours. Why?

This is the "setup tax" of traditional rendering pipelines, and it’s the primary reason product teams hesitate to iterate on visuals late in the process.

The Official Way: Linear Precision

When you visualize a concept in a standard high-fidelity renderer (like KeyShot, V-Ray, or Blender), the workflow is designed for physics, not speed:

- Import Geometry: Clean up NURBS surfaces, tessellate them into meshes.

- Assign Materials: Link textures, adjust roughness, transmission, and subsurface scattering.

- Set Up Lighting: Rotate HDRIs, place physical lights to catch specific edges.

- Configure Camera: Set focal length, depth of field, and framing.

- Render: Compute light paths (ray tracing).

This linear process guarantees accuracy. It ensures that if you build it, it will look like the image. But it creates a massive penalty for "going back."

The Hidden Cost: The Setup Tax

The friction isn't the render time (computation is cheap); it's the setup time (human attention is expensive).

Every time you want to explore a "what if," such as "what if this handle were matte black instead of glossy?", you can't just "paint" that change. You have to open the scene, find the material graph, adjust parameters, check that the lighting still works with the new reflectivity, and re-render.

The Bottleneck: Small inputs (a bevel change, a color tweak) trigger disproportionately large process resets. This discourages experimentation. Teams settle for "good enough" because the cost of "let's try one more version" is too high.
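To make the "process reset" concrete, here is a minimal sketch of the five-stage pipeline as a strictly linear chain, where changing one stage means revisiting every stage after it. This is a toy model, not a measurement: the `Stage` class, the function names, and every duration are hypothetical placeholders chosen only so the full pass adds up to roughly the two hours mentioned earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    minutes: float  # hypothetical cost per pass; illustrative, not measured


# The five stages from the list above, in order.
# All durations are made-up placeholders that sum to 120 minutes.
PIPELINE = [
    Stage("import_geometry", 30),
    Stage("assign_materials", 25),
    Stage("set_up_lighting", 35),
    Stage("configure_camera", 10),
    Stage("render", 20),
]


def rework_minutes(changed_stage: str, pipeline=PIPELINE) -> float:
    """Minutes to redo the changed stage plus every stage downstream of it."""
    names = [stage.name for stage in pipeline]
    start = names.index(changed_stage)  # raises ValueError for an unknown stage
    return sum(stage.minutes for stage in pipeline[start:])


def amplification(edit_minutes: float, changed_stage: str) -> float:
    """Ratio of downstream rework to the time the edit itself took."""
    return rework_minutes(changed_stage) / edit_minutes


if __name__ == "__main__":
    # A 10-second material tweak under the assumed durations.
    print(rework_minutes("assign_materials"))
    print(round(amplification(10 / 60, "assign_materials")))
```

Under these assumed numbers, a ten-second material edit drags 90 minutes of downstream work behind it. Real scenes are rarely this strictly linear (a color tweak may not touch the camera at all), but the shape of the problem is the same: the cost is set by how far upstream the change lands, not by how small the change is.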

The Insight: Accuracy vs. Flow

There is a fundamental difference between constructing a scene for a marketing asset and summoning a visual for a design decision.

- Marketing Assets require physical perfection. You need to know exactly how light hits the chamfer.

- Design Decisions require flow. You need to know "does this feel right?" immediately.

Using a final-grade renderer for decision-grade problems is like using a CAD suite to write a grocery list. It’s too heavy.

The New Model: Velocity of Intent

Some teams are now separating these workflows. They use heavy tools for final validation, but light, prompt-driven tools for the "messy middle" of iteration.

Imagine a workflow where "seeing" an idea is as fast as typing it. Instead of rebuilding a lighting environment to test a darker mood, you simply request it. You trade 100% physical simulation for the ability to generate 20 variations in the time it takes to set up one traditional scene.

This doesn't replace the final render. It unlocks the many ideas that currently die because they are "too much work to set up." It allows the conversation to drive the visual, not the other way around.